Top 15 Research Papers on Generative AI (Real-World Cases)

Komal Jain Aug 5, 2025 1.1K Reads

The use of AI has grown rapidly alongside technology, and it now touches much of our day-to-day life. Conventional AI is applied in fields such as image recognition and natural language processing, while generative AI (GenAI) creates new content, including text and images. Demand for GenAI skills is expected to keep rising in the coming years, so pursuing a course in the field may open up good opportunities with a higher salary package.

Demand for GenAI is expected to grow further over the next five years. If you also want a senior, well-paid position in the corporate world, you can opt for an online DBA (Doctorate of Business Administration). The course is offered by several top-ranked foreign universities and is also valid in India. Once you complete it, you can use the title Dr. before your name.

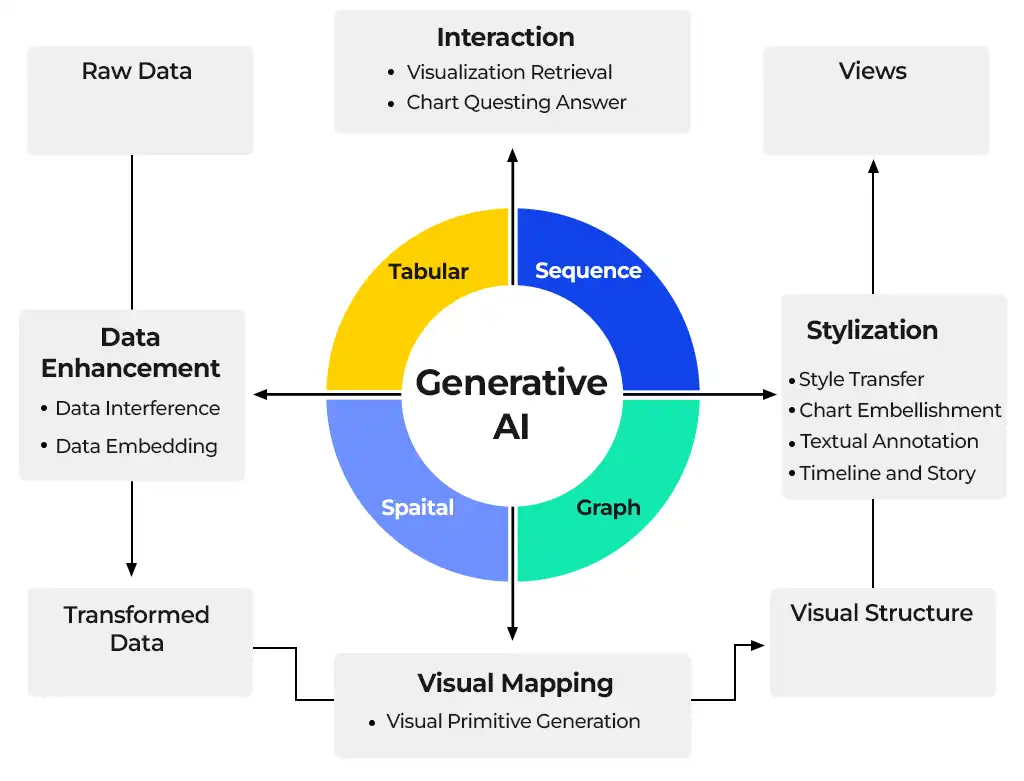

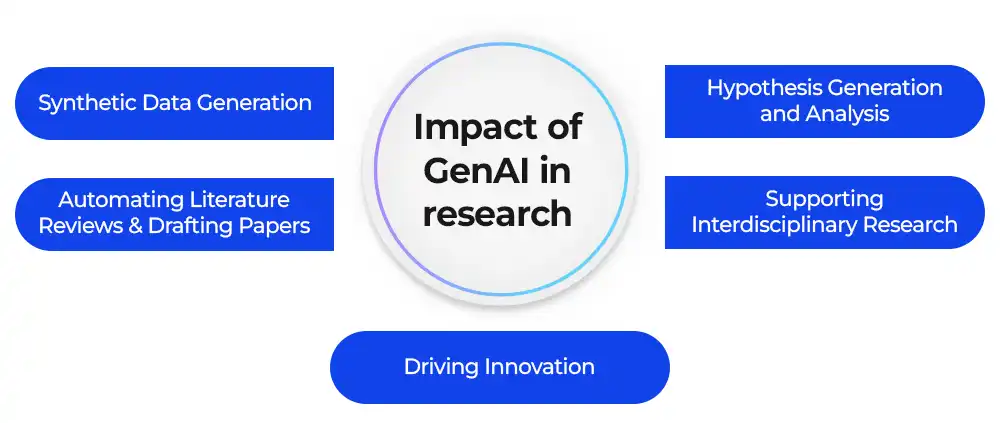

Before pursuing GenAI engineering, it helps to know the research behind it. GenAI research papers are scholarly articles that explore generative artificial intelligence and its models, applications, and implementations. They explain in detail how GenAI generates new content such as text and images. To make this easier for students, we have listed the top 15 research papers on GenAI below, with a short summary of each.

Top 15 Research Papers on GenAI

Generative AI (GenAI) has rapidly evolved from an academic curiosity into a transformative force across industries. It powers everything from text completion and image generation to music composition and code synthesis. Behind this revolution lies a series of groundbreaking research papers that have paved the way for today’s advanced models like GPT-4, DALL·E, and Stable Diffusion.

1) Generative Adversarial Networks (GANs)

- Authors: Ian Goodfellow et al. (2014)

This paper introduced GANs, a framework in which two neural networks, a generator and a discriminator, play a minimax game. The generator tries to produce realistic outputs, while the discriminator tries to distinguish real data from generated data.

- Impact: GANs made adversarial training a mainstream approach to deep generative modeling and produced far sharper synthetic images than earlier methods. Applications include image generation, super-resolution, and image-to-image translation.
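
The generator-versus-discriminator game described above can be made concrete with the value function from the GAN paper, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. The following is a minimal sketch in plain Python; the function name `gan_value` and the toy probability lists are illustrative assumptions, not code from the paper.

```python
import math

def gan_value(d_real, d_fake):
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs (probabilities in (0, 1)) on real samples
    d_fake: discriminator outputs on generated samples
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical outputs: a discriminator that cannot tell real from fake
# predicts 0.5 everywhere, giving V = -2 * log(2).
confused = gan_value([0.5, 0.5], [0.5, 0.5])

# A confident, correct discriminator scores real samples high and fakes low,
# which raises V; the generator's goal is to push it back down.
confident = gan_value([0.9, 0.95], [0.1, 0.05])
```

In a real GAN both networks are trained with gradient steps on this quantity; the sketch only evaluates it for fixed outputs.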

2) Auto-Encoding Variational Bayes (Variational Autoencoders)

- Authors: Kingma & Welling (2013)

VAEs integrate ideas from deep learning and Bayesian inference to support probabilistic generative models that learn flexible latent variable distributions.

- Impact: Provided a stable, principled way to train deep generative models without labels and to generate data such as digits and faces. VAEs are widely applied in healthcare and anomaly detection.
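
Two pieces of the VAE recipe are easy to show in code: sampling a latent vector through the reparameterization trick, and the KL term that keeps the learned latent distribution close to a standard normal prior. The sketch below assumes a diagonal Gaussian posterior parameterized by a mean and a log-variance per dimension; the function names and the example numbers are illustrative assumptions.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

rng = random.Random(0)
z = reparameterize([0.0, 1.0], [0.0, -2.0], rng)

# When the posterior equals the prior, the KL term vanishes.
kl_zero = kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])
# Shifting one mean by 1 costs 0.5 nats.
kl_shifted = kl_to_standard_normal([1.0, 0.0], [0.0, 0.0])
```

In a full VAE, an encoder network would output `mu` and `log_var`, and this KL term would be added to a reconstruction loss.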

3) Attention Is All You Need (Transformers)

- Authors: Vaswani et al. (2017)

This revolutionary paper introduced the transformer architecture, making recurrence or convolutions unnecessary for sequence modeling by leveraging self-attention mechanisms.

- Impact: Facilitated parallel training and scalability, setting the stage for language models such as BERT, GPT, and T5. It is the foundation of contemporary GenAI.
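
The self-attention mechanism at the heart of the transformer can be sketched in a few lines. The example below implements scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, in plain Python. As a simplifying assumption it uses the token vectors directly as queries, keys, and values, whereas a real transformer first applies learned projection matrices and uses several attention heads.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Toy 2-d token vectors (hypothetical values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(tokens, tokens, tokens)
```

Because every query is processed independently of the others, all positions can be computed in parallel, which is what makes transformer training scale well.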

4) GPT-3: Language Models Are Few-Shot Learners

- Authors: Brown et al. (2020)

GPT-3 tested the limits of model scale with 175 billion parameters. It showed that large language models can perform many tasks without task-specific fine-tuning, using only instructions and a handful of examples in the prompt (few-shot, one-shot, and zero-shot learning).

- Impact: GPT-3 popularized LLMs, driving products such as ChatGPT and Copilot. It demonstrated the promise of learning via prompts.
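
Few-shot, one-shot, and zero-shot learning differ only in how many worked examples are placed in the prompt. The sketch below builds such prompts as plain strings; the `Input:`/`Output:` layout and the helper name `build_few_shot_prompt` are assumptions made for illustration, and no particular model API is implied.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a prompt from a task description, worked examples, and a query."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final item is left open for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

task = "Translate English to French."
few_shot = build_few_shot_prompt(
    task, [("cheese", "fromage"), ("sea otter", "loutre de mer")], "peppermint"
)
one_shot = build_few_shot_prompt(task, [("cheese", "fromage")], "peppermint")
zero_shot = build_few_shot_prompt(task, [], "peppermint")
```

The model's weights stay fixed in all three cases; only the text it conditions on changes.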

5) Diffusion Models Beat GANs on Image Synthesis

- Authors: Dhariwal & Nichol (2021)

This work reinvigorated diffusion models, illustrating that they could surpass GANs in both image quality and diversity by iteratively denoising images.

- Impact: It caused a dramatic increase in the application of diffusion models to generate photorealistic images and affected models such as Imagen and Stable Diffusion.
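
The idea of generating images by denoising can be illustrated with the standard DDPM formulation that this line of work builds on. The forward process mixes a clean sample with Gaussian noise, x_t = sqrt(a_bar_t) * x_0 + sqrt(1 - a_bar_t) * eps, and a trained network estimates the noise so the process can be reversed. The sketch below assumes a linear noise schedule and, in place of a trained network, plugs in the true noise to show that the inversion is exact; all names and values are illustrative.

```python
import math
import random

def alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        bars.append(running)
    return bars

def add_noise(x0, eps, a_bar):
    """Forward process: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps."""
    return [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
            for x, e in zip(x0, eps)]

def predict_x0(xt, eps_hat, a_bar):
    """Recover the clean sample from x_t given a noise estimate eps_hat."""
    return [(x - math.sqrt(1.0 - a_bar) * e) / math.sqrt(a_bar)
            for x, e in zip(xt, eps_hat)]

rng = random.Random(0)
x0 = [0.5, -0.25, 1.0]                      # stand-in for image pixels
eps = [rng.gauss(0.0, 1.0) for _ in x0]
bars = alpha_bars(1000)
xt = add_noise(x0, eps, bars[500])
recovered = predict_x0(xt, eps, bars[500])  # a real model would predict eps
```

Actual samplers start from pure noise and apply many small denoising steps, since the network's noise estimate is only approximate.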

6) DALL·E: Zero-Shot Text-to-Image Generation

- Authors: Ramesh et al. (2021)

DALL·E combined an autoregressive transformer with a discrete variational autoencoder (dVAE) that turns images into tokens, allowing it to generate images directly from text prompts.

- Impact: Bridged language and vision, enabling open-domain text-to-image synthesis. It sparked interest in multimodal learning and creative AI.

7) CLIP: Learning Transferable Visual Models from Natural Language Supervision

- Authors: Radford et al. (2021)

CLIP learns visual concepts from natural language supervision by aligning text and images in a common embedding space.

- Impact: Facilitated zero-shot learning across vision tasks and paved the way for aligning images and text in models such as DALL·E and Stable Diffusion.
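
Because text and images share one embedding space, zero-shot classification reduces to finding the label text whose embedding is closest to the image embedding. The sketch below uses cosine similarity over hand-written 3-dimensional vectors; the embeddings are hypothetical placeholders, since a real system would obtain them from trained image and text encoders.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_embedding, label_embeddings):
    """Return the best-matching label and the similarity for every label."""
    scores = {label: cosine(image_embedding, emb)
              for label, emb in label_embeddings.items()}
    return max(scores, key=scores.get), scores

# Hypothetical text embeddings for three candidate captions.
label_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
# Hypothetical embedding of the image to classify.
image_embedding = [0.8, 0.3, 0.1]

best, scores = zero_shot_classify(image_embedding, label_embeddings)
```

New classes can be added by writing new captions, with no retraining of the encoders.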

8) Imagen: Photorealistic Text-to-Image Diffusion Models

- Authors: Saharia et al. (2022)

Google’s Imagen uses large pretrained language models with diffusion for generating images from complex text descriptions.

- Impact: Reported better benchmark results than DALL·E 2, highlighting the role of powerful language priors in image generation.

9) Stable Diffusion

- Authors: Rombach et al. (2022)

Stable Diffusion is built on latent diffusion models: an autoencoder first compresses images into a lower-dimensional latent space, and the diffusion process runs in that space instead of on raw pixels.

- Impact: Democratized AI art by being open-source and light enough to be executed on consumer GPUs. Facilitated mass creativity and commercial uses.
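
A quick calculation shows why working in a compressed latent space makes the model light enough for consumer GPUs. The sketch below assumes the configuration commonly associated with the first Stable Diffusion releases, namely an 8x spatial downsampling factor and 4 latent channels for a 512x512 RGB image; these numbers are stated here as assumptions and are not given in the text above.

```python
def num_values(height, width, channels):
    """Number of scalar values in a height x width x channels tensor."""
    return height * width * channels

def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape of the latent tensor under the assumed autoencoder settings."""
    return height // downsample, width // downsample, latent_channels

pixel_values = num_values(512, 512, 3)           # raw RGB image
lat_h, lat_w, lat_c = latent_shape(512, 512)     # assumed 64 x 64 x 4 latent
latent_values = num_values(lat_h, lat_w, lat_c)
reduction = pixel_values / latent_values         # how much smaller the latent is
```

Under these assumptions the diffusion model operates on roughly 48 times fewer values than it would in pixel space.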

10) PaLM: Scaling Language Modeling with Pathways

- Authors: Chowdhery et al. (2022)

The Pathways Language Model (PaLM) is a dense transformer scaled up to 540B parameters and trained with Google's Pathways system. It achieved state-of-the-art few-shot performance on many NLP benchmarks.

- Impact: Demonstrated the power of multi-task learning and proposed efficient scaling paradigms.

11) LaMDA: Language Models for Dialog Applications

- Authors: Thoppilan et al. (2022)

LaMDA was centered on open-domain conversation, allowing for more natural and factual discussions.

- Impact: Had an impact on chat-specific model development, where safety and groundedness are prioritized in conversational agents.

12) Codex: Evaluating Large Language Models Trained on Code

- Authors: Chen et al. (2021) (OpenAI Codex)

Codex, the model behind the original GitHub Copilot, assists with code synthesis, translation, and completion through generative modeling of source code.

- Impact: Boosted developer productivity and brought GenAI into software engineering practice.

13) BLIP-2: Bootstrapping Language-Image Pre-training

- Authors: Li et al. (2023)

BLIP-2 bootstraps vision-language pretraining from a frozen image encoder and a frozen large language model, connecting the two with a lightweight querying transformer (Q-Former).

- Impact: Enhanced vision-language model efficiency and set the stage for scalable multimodal applications.

14) Flamingo: A Visual Language Model for Few-Shot Learning

- Authors: Alayrac et al., DeepMind (2022)

Flamingo combines text and vision through a multimodal transformer and facilitates few-shot learning for visual question answering and captioning.

- Impact: Set new boundaries in sample-efficient multimodal learning and impacted models such as Gemini and GPT-4V.

15) Sora: Video Generation with Diffusion Transformers

- Authors: OpenAI (2024 technical report)

Described in a technical report instead of a peer-reviewed paper, Sora is a significant advance in generative video modeling that combines transformers with diffusion.

- Impact: Points to the future of GenAI: realistic, coherent, and editable video generation from prompts.

Final Thoughts: The GenAI Landscape Ahead

These 15 research papers trace a clear path from generating static images and completing text to producing images, text, code, and video with impressive realism through multimodal, interactive systems. What links these successes is scale, data, architecture, and alignment. While the field has made significant progress, the path ahead is about improving factual accuracy, mitigating bias, and increasing controllability.

As we develop foundation models with greater general intelligence, these main research contributions will give you a better understanding of how GenAI operates and its potential directions.

An Online DBA: A Road That Will Take You To A Successful Career

An online DBA (Doctorate of Business Administration) is a doctoral program designed for working professionals who want to advance their skills and move into more senior positions. If you are a working professional looking to build your knowledge and earn a higher salary, a DBA can be the right choice, and because it is delivered online, you do not need to leave your job to take it. If you are wondering whether it is valid in India, the answer is yes: the online DBA is WASC-accredited and valid in India and other countries.

Prestigious universities offering this course are listed below for reference.

| University | Program | Fees (INR) |
|---|---|---|
|  | DBA Online | ₹6,00,000 |
|  | DBA Online | ₹8,14,000 |
|  | DBA Online | ₹8,12,500 |
| ESGCI International School of Management Paris Online DBA Program | DBA Online | ₹8,14,000 |
|  | DBA Online | ₹5,50,000 |
|  | DBA Online | ₹7,50,000 |
|  | DBA Online | ₹7,00,000 |

Note: The fees might vary; it is recommended to check it with the universities.

FAQs (Frequently Asked Questions)

The Transformer, proposed by Vaswani et al. in Attention Is All You Need (2017), is the fundamental architecture behind contemporary LLMs and multimodal models. It employs self-attention over recurrent mechanisms, allowing parallelizable processing for applications such as translation, text generation, and image comprehension.

The GPT‑3 research (Language Models are Few‑Shot Learners, Brown et al., 2020) demonstrated that large language models (175B parameters) are able to execute diverse tasks from a few examples without task‑specific fine‑tuning.

GANs (Goodfellow et al., 2014) provided a game‑theoretic setup in which a generator and discriminator play against each other, resulting in very realistic image synthesis.

DCGAN (Radford et al., 2015) used convolutional layers in GANs, which stabilized training and greatly improved the quality of generated images.

The Diffusion Models: A Comprehensive Survey (2022) points out that diffusion methods now surpass GANs in image diversity and fidelity, with models such as DALL·E 2, Imagen, and Stable Diffusion leading the way.

- CLIP (Radford et al., 2021) learns representations of images from large-scale image-text pairs.

- DALL·E (2021) and GLIDE/DALL·E 2 apply autoregressive or diffusion models to transform text prompts into high-fidelity images.

- Flamingo (2022) and BLIP-2 (2023) advance vision-language conversation and instruction-following ability.

AlphaFold2 (2021) applies attention-based deep learning to forecast protein structures with near-experimental accuracy, transforming bioinformatics and drug discovery.

The AI Scientist framework (2024) demonstrates AI agents that can ideate, code, run experiments, and review their own work, writing papers independently and accelerating scientific discovery in ML.

Artificial muses (2023) found that contemporary chatbots (e.g., GPT‑4) can match human creativity in ideation tasks, with only ~9% of humans beating the models.

Studies such as Flamingo, PaLI, BEiT‑3, CLIP, and MM1 (2021–2024) are converging modalities (text, image, audio), resulting in models that can see, hear, and talk, and greatly improving generalized reasoning across domains.

By Komal Jain

5 Years of Experience / Storyteller / Research-driven Writer

Passionate about digital marketing, with a creative flair for content creation. Always eager to learn, grow, and make a meaningful impact in the digital space.
